1) 데이터 불러오기

import os

from nltk import pos_tag, word_tokenize, sent_tokenize

base = "./scraping/"

corpus = list()

for _ in [_ for _ in os.listdir(base) if "IT" in _][:100]:

with open(base + _) as fp:

corpus.append(fp.read())

2) 토큰화

from konlpy.tag import Komoran

ma = Komoran()

tokens = list()

for doc in corpus:

for _ in word_tokenize(doc):

nonus = ma.nouns(_)

if len(nonus) > 1:

tokens.extend(nonus)

3) Frequency 가져오기

from nltk.probability import FreqDist

fd = FreqDist(tokens)

fd.most_common(10)

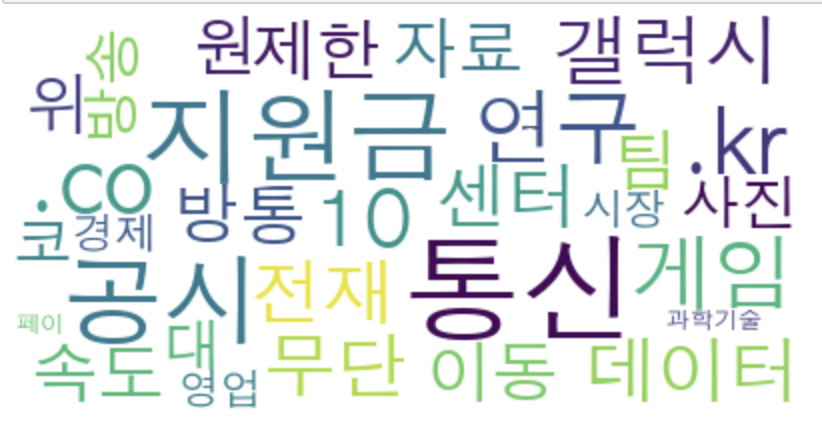

4) WordClude 만들기

from wordcloud import WordCloud

font = "/Library/Fonts/AppleGothic.ttf"

wc = WordCloud(font, max_words=30, background_color="white")

5) WordClude 출력하기

wc.generate_from_frequencies(fd)

wc.to_image()

'데이터 분석가 역량' 카테고리의 다른 글

| day 16 ] PreProcessing => Lexicon까지 정리 및 실습 (0) | 2019.05.22 |

|---|---|

| day 16 ] 전체 과정 정리(막 정리함...) (0) | 2019.05.22 |

| day 15 ] grammar (0) | 2019.05.21 |

| day 15 ] GitHub (0) | 2019.05.20 |

| day 13 ] pos (0) | 2019.05.16 |